We are excited to present our research on obstacle avoidance for drones at the IEEE SEM 2015 Fall Conference on November 17, 5-6 PM. The talk is titled

“Obstacle Detect, Sense, and Avoid for Unmanned Aerial Systems”

Abstract:

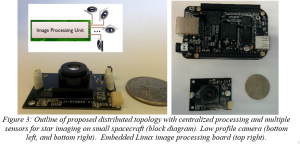

Drones, or Unmanned Aerial Systems (UAS), are expected to be adopted for a wide range of commercial applications and to become a part of everyday life. The Federal Aviation Administration (FAA) regulates airspace access for unmanned systems and has put forward a road map for commercial UAS adoption. Vehicles flying beyond line of sight are expected to be capable of sensing and avoiding other aircraft and obstacles. Whether a UAS is autonomous or remotely piloted, it is expected to fly safely without depending on communication links, which are susceptible to disruption. Therefore, sensor technologies and real-time processing and control approaches are required on board unmanned aircraft to provide situational awareness without depending on remote operation or inter-aircraft communication. This talk gives an overview of research activities at the University of Michigan-Dearborn that address these challenges. We are developing a stereo-vision system for obstacle detection on aerial vehicles. Using stereo video (3D video), a depth map can be generated and used to detect approaching objects that need to be avoided. We are also developing a visual navigation approach that enables drones to navigate in GPS-denied environments, such as between buildings or indoors. In addition, a virtual “bumper” system is being developed to override commands given by an inexperienced pilot when a crash is impending. Such a system could help prevent incidents such as the video drone crash at the last US Open Tennis Championships.
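To make the depth-map idea concrete, here is a minimal sketch of how a stereo disparity map could be turned into distances and checked against a safety margin. It is an illustration only, not the system described in the talk. It assumes a rectified stereo pair and the standard pinhole relation Z = f * B / d, and every name and number in it (`disparity_to_depth`, `must_avoid`, the focal length, baseline, and safety distance) is hypothetical.

```python
from typing import List, Optional


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth in meters from Z = f * B / d (rectified stereo assumed).

    Returns None when the disparity is zero or negative, which is how
    this sketch marks pixels with no valid stereo match.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def nearest_obstacle_m(disparity_map: List[List[float]], focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Smallest valid depth in the map, or None if no pixel matched."""
    depths = [
        disparity_to_depth(d, focal_px, baseline_m)
        for row in disparity_map
        for d in row
    ]
    valid = [z for z in depths if z is not None]
    return min(valid) if valid else None


def must_avoid(disparity_map: List[List[float]], focal_px: float,
               baseline_m: float, safety_m: float) -> bool:
    """True when the closest detected point is inside the safety margin."""
    nearest = nearest_obstacle_m(disparity_map, focal_px, baseline_m)
    return nearest is not None and nearest < safety_m


# Hypothetical camera: 400 px focal length, 0.5 m baseline.
# A larger disparity means a closer object; 0.0 marks "no match".
example_map = [
    [0.0, 20.0],
    [100.0, 40.0],
]
closest = nearest_obstacle_m(example_map, focal_px=400.0, baseline_m=0.5)
alert = must_avoid(example_map, focal_px=400.0, baseline_m=0.5, safety_m=3.0)
```

A real system would compute the disparity map from the two camera images and would filter noise before acting on a single close pixel; the sketch only shows the geometric step from disparity to distance.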

Conference Time and Venue:

Tuesday evening, November 17, 2015, from 4:00 PM to 9:00 PM

University of Michigan – Dearborn

Fairlane Center – North Building

19000 Hubbard Drive, Dearborn, Michigan 48126

More information on the conference agenda can be found on the main page and in this flyer.