In this page, we provide the dataset that is used in our work titled: High-Temporal-Resolution Object Detection and Tracking using Images and Events. The dataset provides a synchronized stream of event data and images captured using the event-based hybrid sensor, DAVIS 240c (specifications provided here). Furthermore, we provided manually annotated ground truth (object class, bounding box, object ID).

Main applications: Object detection and/or object tracking for various vehicle object types (cars, trucks, vans, etc.) using either events or images or a combination of both.

Event camera used: Dynamic and Active-Pixel Vision Sensor (DAVIS) 240c combines a grayscale camera, known as Active Pixel Sensor (APS), as well as the event-based sensor DVS, using the same pixel array. The resolution of the camera is 240×180 pixels. Images are captured at around 24 frames per second (FPS), whereas events are captured asynchronously with a temporal resolution of 1 µs.

Dataset summary: scene A contains 32 sequences with 9274 images and 6828 annotations, while scene B contains 31 sequences with 3485 images and 3063 annotations, totaling 9891 vehicle annotations.

– Note: The difference between the number of images and annotations is due to the frames that do contain any objects.

Dataset Setup

The data was collected at two different scenes at the campus of the University of Michigan-Dearborn. In both scenes, the event camera is placed on the edge of a building while pointing downwards at the street, representing an infrastructure camera setting. No ego-motion is applied to the camera (the camera is static). A sample of each scene’s view is shown below.

The data was collected using DAVIS 240c and the ROS DVS package developed by Robotics and Perception Group (link). The data was initially collected and saved in several ROS bags, which we later processed by extracting the images and event data collected, then splitting the temporally synchronized data into shorter sequences presented here. These shorter sequences remove the periods of no objects present or moving in the scene.

- Note 1: this data also contains some pedestrians, which are not labeled yet and were out of the scope of our work. A future version of this dataset will include pedestrian labels and some sequences that mainly contain pedestrians.

- Note 2: In scene A, the vehicles parked at the top are not labeled due to them being static and their relative size, also, any vehicles moving behind them are not labeled either. Overall, the top 15-20% of the frame can be ignored in your application.

Data Format

The data captured of each scene is split into 30+ short sequences (one folder for each). Each sequence’s folder is named as the timestamp of the first image it contains.

Directories Structure

The dataset directories are structured as follows:

Traffic Sequences/

--> Scene A/

------> 1581956305832790936/ (seq 1 folder)------------>1581956305832790936_events.csv (events file)

-------------> 1581956305832790936_imageTimes.csv (image times/names file)

-------------> (images 1 - n . png)

------> 1581956366514475936/ (seq 2 folder)

------> ...

--> Scene B------> ...

gt/

--> Scene A/------> 1581956305832790936/ (seq 1 folder)------------>------------>------------>

--> Scene B/------> ...

Grayscale image frames

Per each sequence folder, images are formatted as:

- Filename: timestamp in nanoseconds of capture time.

- Format: .png

We also provide an extra CSV file containing the image names/times of a given sequence in order of time (oldest first). This file is formatted as:

- Filename: <sequence_folder_name>_imageTimes.csv

- 1 image per line formatted as:

<image number> <image name or timestamp in ns>- <image number> can be ignored as it is based on the image number in the initially captured ROS bags.

Events

Each sequence folder contains a CSV file that includes all of the events captured during the duration of the given sequence, formatted as:

- Filename: <sequence_folder_name>_events.csv

- 1 event per line with each event formatted as:

<timestamp in ns>,<x coordinate>, <y coordinate>, <polarity>

Note: In the raw event data stream collected, we noticed that a few sequences had an issue causing of a stuck pixel giving consecutive redundant events with the same X and Y pixel coordinates and polarity, which is not possible within such a short time (sometimes multiple at the same exact timestamp). Thus we filtered out these faulty events from the provided data.

Ground Truth Labels

Per each sequence, we provide the manually annotated labels in 3 different file formats:

- COCO annotations in JSON file format:

- MOTChallenge format:

- 1 label per line per image

- Each line is formatted as:

<frame no.>, <object id>, <bb_left>, <bb_top>, <bb_width>, <bb_height>, <conf>, <x>, <y>, <z><x>, <y>, <z>are world coordinates that are ignored here in our 2D dataset. Set as -1.<conf>detection confidence (not needed here) is set as -1 for ground truth data.

- Reference: https://motchallenge.net/instructions/

- Custom format:

- 1 line per each image/frame at a given timestamp T.

- Each line would contain the frame’s time in ns and the frame’s id, as well as any labeled objects at that instant.

- Each line is formatted as:

<frame_time_ns>, <frame_id>,<object1_id>, <bb1_left>, <bb1_top>, <bb1_width>, <bb1_height>,<object2_id>, <bb2_left>, <bb2_top>, <bb2_width>, <bb2_height>, …

High-temporal-resolution ground truth: we also provide high-temporal-resolution ground truth data in the MOTChallenge format and as well as our custom format. These are generated by temporally interpolating our ground truth labels per each unique object being tracked, based on a constant acceleration model.

The initial rate is 24 Hz (named “-mot24.txt” and “-custom24.txt”) is similar to the labels provided in the COCO format file, while the other rates are 48, 96, 192, and 384 Hz (e.g. named “-mot48.txt” and “-int48.txt” for 48 Hz tracking resolution, and so on, based on the desired rate).

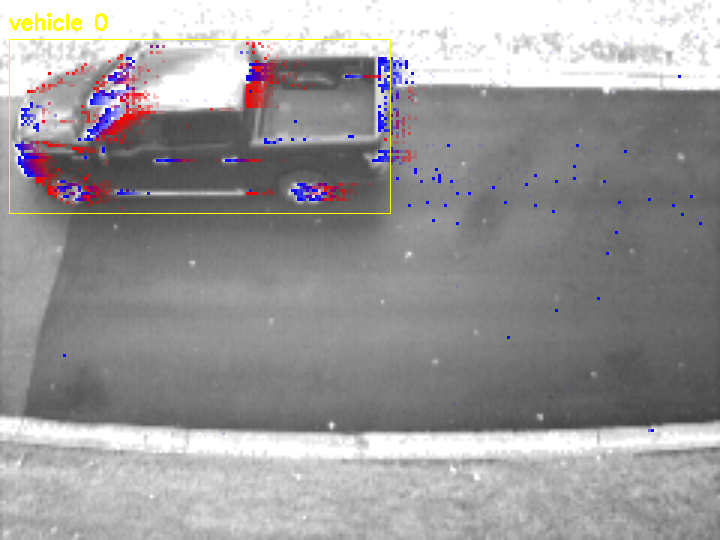

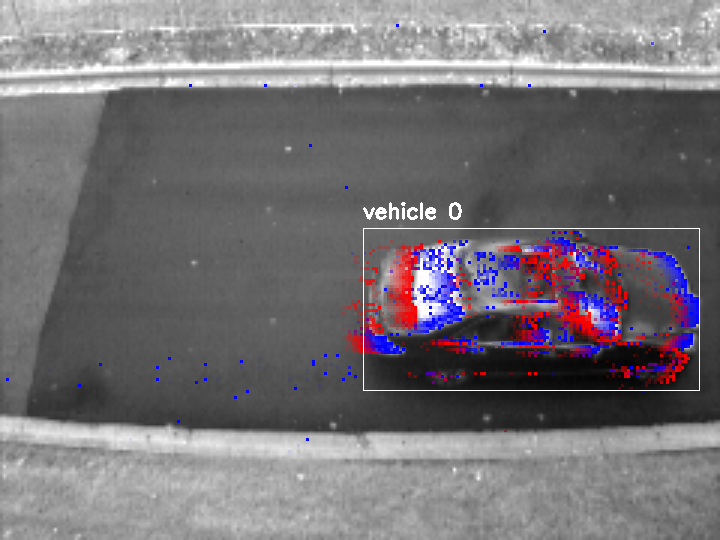

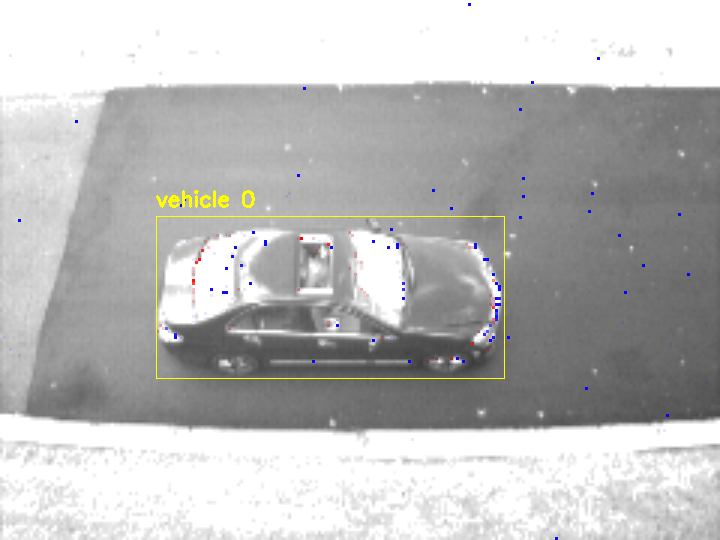

Data Samples

Below we demonstrate some samples from our collected data with the ground truth annotations including objects’ bounding box and unique ID for tracking.

Scene A:

Scene B:

The events are overlayed on top images. The events demonstrated in each sample are the ones captured during the last ~42 ms (the time between 2 consecutive frames for images captured at 24 fps). The older events are more transparent.

Below we demonstrate the inter-frame GT labels provided in this dataset at 96 Hz (slowed down for visualization). Accordingly, 4x the GT labels’ temporal resolution compared to at 24 Hz (labels reset whenever a new image is available).

Download

The dataset can be downloaded using this link.

Citation

If you use our work, please consider citing this publication:

Bibtex:

@article{el2022high,

title={High-Temporal-Resolution Object Detection and Tracking Using Images and Events},

author={El Shair, Zaid and Rawashdeh, Samir A},

journal={Journal of Imaging},

volume={8},

number={8},

pages={210},

year={2022},

publisher={MDPI}

URL = {https://www.mdpi.com/2313-433X/8/8/210},

ISSN = {2313-433X},

DOI = {10.3390/jimaging8080210}

}

Chicago:

El Shair, Zaid, and Samir A. Rawashdeh. "High-Temporal-Resolution Object Detection and Tracking Using Images and Events." Journal of Imaging 8, no. 8 (2022): 210. https://doi.org/10.3390/jimaging8080210

Support: Please feel free to reach out to me at zelshair@umich.edu if you have any questions or need additional information.